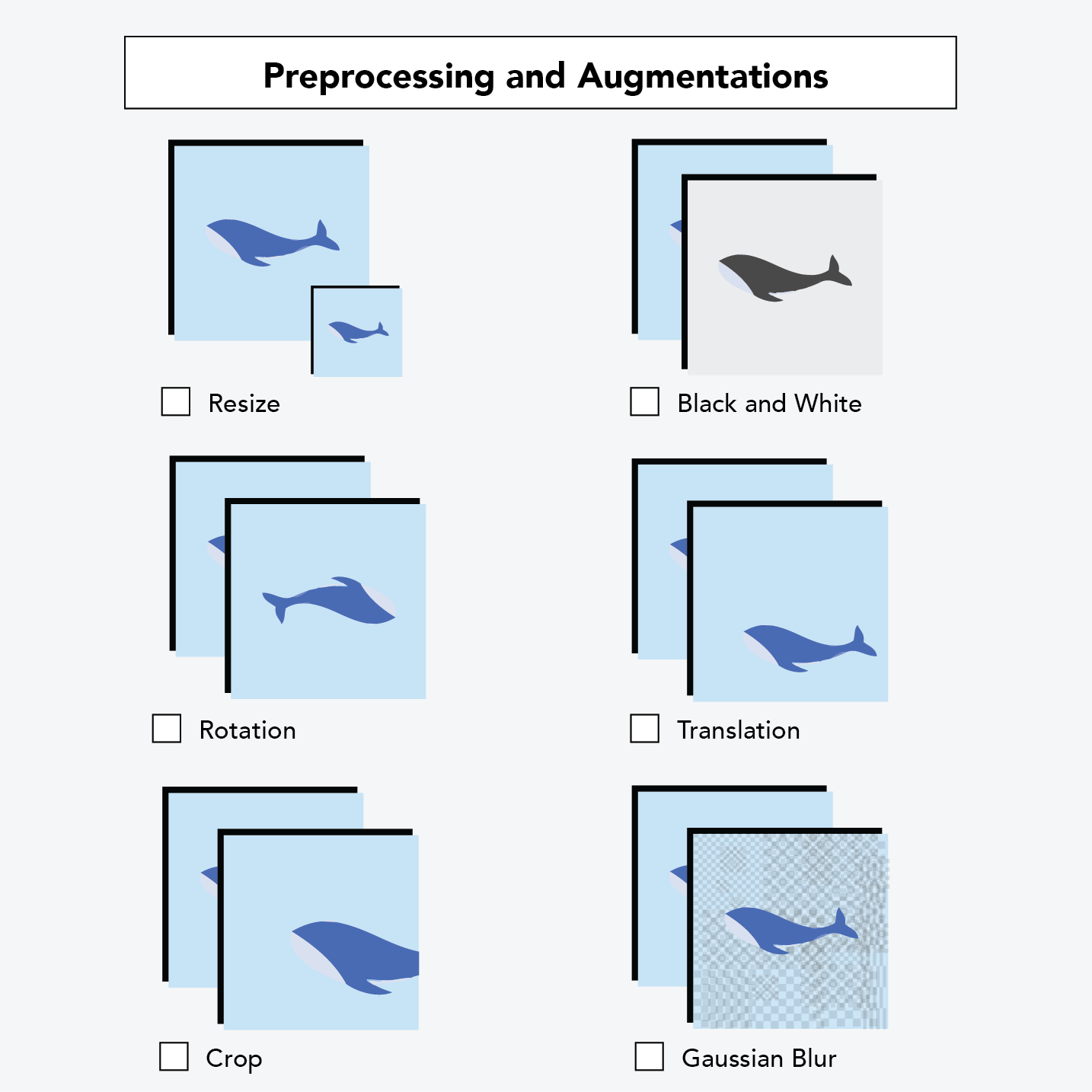

This post is part of a series on image preprocessing and augmentation. See the first post, which outlines all the others.

Digital images are nothing but numeric values, typically ranging from 0 to 255, stored in neat arrays that tell our computer how bright to display each pixel. So where does color come from?

Fundamentally, color is generated by mixing red, green, and blue in various proportions. When an image is a color image, it's stored digitally as three arrays (called channels): one of red values, one of green values, and one of blue values. Our computer "mixes" these on the fly to produce color output.
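To make this concrete, here is a minimal NumPy sketch of how a color image is laid out in memory. It assumes the height × width × channels layout with R, G, B channel order used by most Python imaging libraries (OpenCV, by contrast, loads images in B, G, R order); the 2×2 pixel values are made up for illustration.

```python
import numpy as np

# A tiny 2x2 color image with shape (height, width, channels).
# Channel order is assumed to be R, G, B.
image = np.array(
    [
        [[255, 0, 0], [0, 255, 0]],      # red pixel, green pixel
        [[0, 0, 255], [255, 255, 255]],  # blue pixel, white pixel
    ],
    dtype=np.uint8,
)

# Split the image into its three separate 2D arrays (channels).
red, green, blue = image[..., 0], image[..., 1], image[..., 2]

print(image.shape)  # (2, 2, 3)
print(red)
```

PyTorch models generally expect channels first, (channels, height, width), which you can get from this layout with `image.transpose(2, 0, 1)`.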

So when our neural network sees a color image, it performs convolution across each of the red, green, and blue channels.

This adds complexity to our calculation. As opposed to convolving just one array, each filter in the first convolutional layer carries separate weights for the red, green, and blue arrays, so PyTorch must perform roughly three times as many multiply-adds at that layer.
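The sketch below is a naive NumPy loop, not what PyTorch actually runs, that slides one 3×3 filter over a 32×32 input and counts the multiply-adds. Like deep learning frameworks, it computes cross-correlation (no kernel flip); it assumes no padding and a stride of 1, and the input sizes are arbitrary.

```python
import numpy as np

def conv2d_single_filter(image, kernel):
    """Valid (no padding, stride 1) 2D cross-correlation with one filter.

    image:  array of shape (channels, height, width)
    kernel: array of shape (channels, k, k), one k x k slice per channel
    Returns the output feature map and the number of multiply-adds used.
    """
    channels, height, width = image.shape
    _, k, _ = kernel.shape
    out_h, out_w = height - k + 1, width - k + 1
    output = np.zeros((out_h, out_w))
    for i in range(out_h):
        for j in range(out_w):
            window = image[:, i:i + k, j:j + k]
            # Multiply every channel's window by its own kernel slice, then
            # sum across all channels into a single output value.
            output[i, j] = np.sum(window * kernel)
    multiply_adds = out_h * out_w * channels * k * k
    return output, multiply_adds

rng = np.random.default_rng(0)
rgb = rng.random((3, 32, 32))   # 3-channel (color) input
gray = rng.random((1, 32, 32))  # 1-channel (grayscale) input

_, rgb_ops = conv2d_single_filter(rgb, rng.random((3, 3, 3)))
_, gray_ops = conv2d_single_filter(gray, rng.random((1, 3, 3)))
print(rgb_ops, gray_ops)  # 24300 8100
```

Note that the filter still produces one output map either way; the color input simply makes each output value three times as expensive to compute.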

Grayscale images, on the other hand, store values in only a single array (shades from black to white), meaning the above calculation requires convolving just one array.
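One common way to collapse three channels into one is a weighted sum of red, green, and blue. The sketch below assumes the ITU-R BT.601 luma weights (0.299, 0.587, 0.114), the same weights Pillow documents for its `"L"` mode conversion; other libraries may use slightly different coefficients.

```python
import numpy as np

# ITU-R BT.601 luma weights: green counts most, blue least.
LUMA_WEIGHTS = np.array([0.299, 0.587, 0.114])

def to_grayscale(rgb):
    """Collapse an (H, W, 3) RGB uint8 array into an (H, W) uint8 array."""
    gray = rgb.astype(np.float64) @ LUMA_WEIGHTS  # weighted sum per pixel
    return np.clip(np.round(gray), 0, 255).astype(np.uint8)

image = np.zeros((4, 4, 3), dtype=np.uint8)
image[..., 0] = 255  # a solid red image
gray = to_grayscale(image)
print(image.shape, "->", gray.shape)  # (4, 4, 3) -> (4, 4)
print(gray[0, 0])  # 76
```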

So, if grayscale is more computationally efficient*, perhaps a better question is: when should we not use it as a preprocessing step for our machine learning models?

(*presumes images have been stored and fed to the model as single-channel images)
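The footnote matters because grayscale only saves work if the model actually receives one channel. The sketch below assumes an arbitrary 224×224 input and compares memory for a color image, a true single-channel image, and a grayscale image copied back into three identical channels (a pattern sometimes used to fit models that expect 3-channel input).

```python
import numpy as np

height, width = 224, 224  # arbitrary example input size

rgb = np.zeros((height, width, 3), dtype=np.uint8)
gray = np.zeros((height, width), dtype=np.uint8)

# Grayscale values duplicated into three identical channels.
gray_as_rgb = np.repeat(gray[..., np.newaxis], 3, axis=-1)

print(rgb.nbytes)          # 150528
print(gray.nbytes)         # 50176
print(gray_as_rgb.nbytes)  # 150528
```

The replicated version carries no color information, yet it costs as much to store and convolve as the original color image.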

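Our answer is fairly intuitive, but with nuance: when we believe color provides meaningful signal to a model and the colors of interest are similar in brightness, we should be cautious about using grayscale. Grayscale keeps only how light or dark each pixel is, so colors that differ mainly in hue can end up as nearly the same shade of gray.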

What does this look like in practice?

Imagine you're building a deep learning model in Keras to detect and classify chess pieces. Here, color clearly matters to our model: one player plays the black pieces and the other plays the white pieces. But we needn't be too concerned about applying grayscale as a preprocessing step. Even though color matters in this case, our colors of interest (black and white) contrast strongly. Showing our model grayscaled images will still enable it to easily distinguish between the black and white players.

Consider building a TensorFlow model to aid a self-driving car with lane detection. Here, color also matters: the middle of the road contains yellow lines, and the edges of the road contain white lines. In this case, we should be wary of applying grayscale: white and yellow are relatively similar in brightness (that is, low-contrast colors), so grayscaling may make the two kinds of lines hard to tell apart.
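We can put rough numbers on both examples by converting each color of interest to a single gray value. This sketch assumes the same BT.601 luma weights as above and uses idealized pure colors; real chess pieces and lane paint under real lighting will vary.

```python
import numpy as np

LUMA_WEIGHTS = np.array([0.299, 0.587, 0.114])  # ITU-R BT.601

def gray_value(rgb):
    """Grayscale intensity (0-255) of a single RGB color."""
    return float(np.dot(rgb, LUMA_WEIGHTS))

colors = {
    "black": (0, 0, 0),
    "white": (255, 255, 255),
    "yellow": (255, 255, 0),  # idealized lane-line yellow
}
gray = {name: gray_value(rgb) for name, rgb in colors.items()}

# Chess: black and white pieces stay at opposite ends of the gray scale.
print(round(gray["white"] - gray["black"]))   # 255
# Lanes: white and yellow lines land close together.
print(round(gray["white"] - gray["yellow"]))  # 29
```

In RGB, white and yellow differ by a full 255 in the blue channel; after grayscaling, only about 29 of 255 levels separate them, so the model has far less signal to work with.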

In sum, when color matters, grayscale is most appropriate where the contrast between the colors of interest is high.