This article was contributed to the Roboflow blog by Abirami Vina.

From tracking the movements of athletes to analyzing game strategies, there are many computer vision applications that can aid our collective understanding of various sports and player performance in individual games.

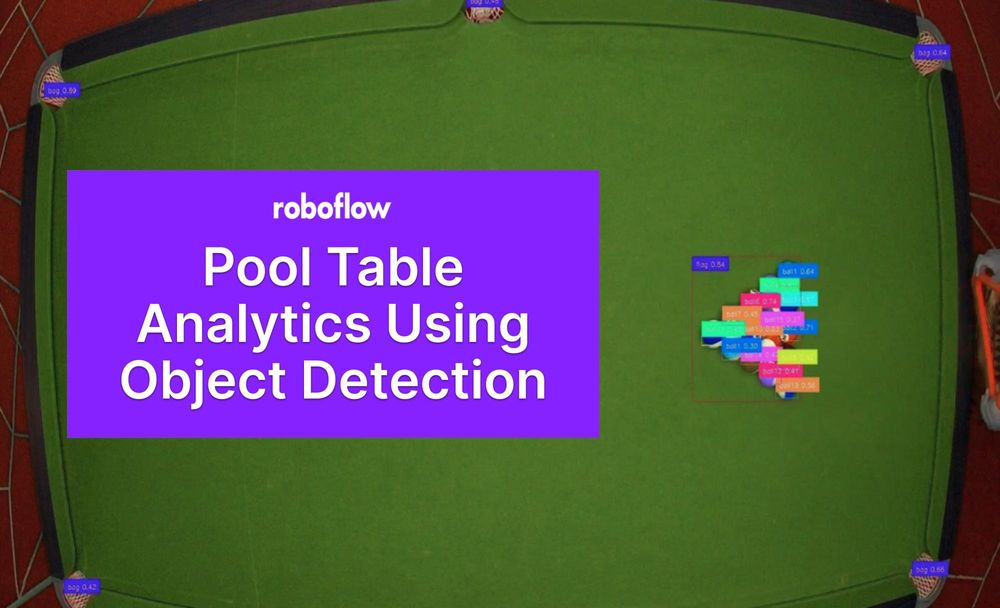

One particularly interesting application is billiards, a game that puts one’s precision, strategy, and skill to the test. Computer vision, with its capacity to analyze and interpret visual data, can become an invaluable ally in understanding the nuances of a game that demands nothing short of perfection.

In this guide, we’ll explore how object detection can change the way pool is played and how you can try it out yourself. Let’s get started!

How Can Object Detection Impact a Game of Pool?

Object detection can play a pivotal role in pool analytics by precisely identifying each ball on the table. Combined with tracking across video frames, it enables real-time analysis of play, which can fundamentally change the way we experience a game of pool. Let’s look at it from three points of view: players, referees, and spectators.

Players

Object detection can help players by tracking ball movements and capturing every ricochet and deflection. Beyond automating scoring, this data enables predictive analytics, such as forecasting ball trajectories, which gives players an extra edge in strategy and execution during practice.

Above is an augmented reality system that applies computer vision to assist shot planning and execution in a game of billiards.
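As a rough illustration of trajectory prediction, the sketch below extrapolates a ball’s path from its detected center in two consecutive frames. It is a deliberately simplified model, not how any particular system works: it assumes a top-down view where the playing surface spans `[0, table_w] x [0, table_h]`, constant velocity with no friction or spin, mirror-like cushion reflections, and a per-frame movement smaller than the table. `predict_path` is a hypothetical helper, not part of any Roboflow library.

```python
def predict_path(prev, curr, table_w, table_h, steps=5):
    """Extrapolate a ball's path from two consecutive center positions.

    Assumptions: top-down view, playing surface spanning
    [0, table_w] x [0, table_h], constant velocity (no friction or spin),
    mirror-like reflections off the cushions, and at most one reflection
    per axis per step.
    """
    x, y = curr
    # Velocity estimated as the displacement between the two frames
    vx = curr[0] - prev[0]
    vy = curr[1] - prev[1]
    path = []
    for _ in range(steps):
        x += vx
        y += vy
        # Reflect off the left/right cushions
        if x < 0:
            x, vx = -x, -vx
        elif x > table_w:
            x, vx = 2 * table_w - x, -vx
        # Reflect off the top/bottom cushions
        if y < 0:
            y, vy = -y, -vy
        elif y > table_h:
            y, vy = 2 * table_h - y, -vy
        path.append((x, y))
    return path
```

For example, a ball moving right at 5 pixels per frame from `(95, 50)` on a 100 x 60 table reaches the cushion and comes back: `predict_path((90, 50), (95, 50), 100, 60, steps=3)` gives `[(100, 50), (95, 50), (90, 50)]`. Real shot prediction would also need to model friction, spin, and ball-to-ball contact.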

Object detection also makes it possible to collect player-specific data, including shot accuracy, ball control, and strategic decision-making. These systems enable a comprehensive analysis of player performance. Object detection-based statistics can be used to generate player rankings and track progress over time. Players can go over their individual data to identify areas for improvement, refine their strategies, and fine-tune their skills.
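As a minimal sketch of turning detection-based events into rankings, the function below computes each player’s pocketing rate and sorts players by it. The input format, a list of `(player, pocketed)` tuples, is a hypothetical assumption: it presumes an upstream pipeline has already decided, for each shot, whether the target ball went in.

```python
from collections import defaultdict


def rank_players(shots):
    """Rank players by pocketing rate.

    `shots` is a hypothetical list of (player, pocketed) tuples, where
    `pocketed` is True if the target ball was seen going into a pocket.
    """
    attempts = defaultdict(int)
    made = defaultdict(int)
    for player, pocketed in shots:
        attempts[player] += 1
        if pocketed:
            made[player] += 1
    accuracy = {player: made[player] / attempts[player] for player in attempts}
    # Highest accuracy first; ties broken alphabetically for a stable order
    return sorted(accuracy.items(), key=lambda item: (-item[1], item[0]))
```

The same tallies, stored per session, would be one way to track a player’s progress over time.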

Referees

Referees in pool games often face the challenge of detecting fouls, illegal shots, or ball collisions in real time. Object detection systems equipped with high-speed cameras and sophisticated algorithms can step in to assist.

Rule violations, such as striking the cue ball twice or failing to hit the target ball first, can be picked up using computer vision techniques. By flagging these events, object detection can improve the overall fairness of the game, giving referees more information to use when making a call.
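To make the “target ball first” rule concrete, here is a minimal sketch that assumes we already have a time-ordered list of collision events for a single shot, for example from a per-frame bounding-box overlap check like the one later in this guide. The cue-ball name `ball0` follows the class names used by the model in this guide. `is_first_contact_foul` is a hypothetical helper, and real rule sets have more cases than this covers.

```python
def is_first_contact_foul(events, target, cue="ball0"):
    """Return True if the cue ball's first contact was not the target ball.

    `events` is an assumed time-ordered list of (ball_a, ball_b) collision
    pairs for one shot; `target` is the ball the player must hit first.
    """
    for a, b in events:
        if cue in (a, b):
            first_hit = b if a == cue else a
            return first_hit != target
    # The cue ball touched no other ball at all; treated as a foul here
    return True
```

Collisions between other balls are skipped until the cue ball appears in an event, so only the cue ball’s first contact decides the call.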

Spectators

Real-time object detection on pool tables offers broadcasters a wealth of visual assets to enhance the spectator experience. Television audiences can now witness the trajectory of balls, displayed as dynamic overlays on the screen.

These overlays not only add an element of excitement to the viewing experience but also provide insight into the player's strategy and shot execution. Predictive shot paths, computed from the ball positions that object detection provides, allow viewers to anticipate the outcome of each shot, heightening the drama of the game.

Applying Object Detection To a Game of Pool

In this section, we will use a pre-trained pool object detection model to analyze a video of a pool game. We are going to explore how to apply object detection, so this guide won’t go into the details of custom training a model to detect the various parts of a game of pool. To learn more about training a custom object detection model, check out our YOLOv8 custom training guide.

A Trained Object Detection Model

We’ll be using a pool object detection model available on Roboflow Universe that has already been trained. Roboflow Universe is a community that provides access to open-source datasets and pre-trained models tailored for computer vision, boasting a collection of over 200,000 datasets and 50,000 pre-trained models. Sign up for a Roboflow account and navigate to the model’s deployment page, as shown below.

This model has been trained to detect the different pockets, balls, and flag (starting) position of the balls. If you scroll down, you’ll see a piece of sample code that shows how to deploy the API for this model, as shown below.

Note down the model ID and version number from the third and fourth lines of the sample code. In this case, the model ID is “ball-qgqhv,” and it’s the sixth version of the model. This information will be useful for us when we put together our inference script.

Extracting Pool Table Analytics From Images

To showcase extracting pool table analytics, I’ve downloaded a few images from the dataset associated with the trained model we are using. You can do the same, use your own images, or download relevant images from the internet.

We’ll be using Roboflow Inference to run our inference tasks. Roboflow Inference can be used as a Python library or run as an HTTP microservice (the Roboflow Inference Server) through its Docker interface. We’ll be using the Python library, as it’s a lightweight option well-suited for Python-focused projects.

Setting up Roboflow Inference

To install Roboflow Inference on a CPU device, run:

```bash
pip install inference
```

To install Roboflow Inference on a GPU device, run:

```bash
pip install inference-gpu
```

Detecting the Start of a Game

We can detect when a game of pool has been set up using object detection predictions. Let’s walk through the code.

Step #1: Load the Trained Model

```python
import numpy as np
import cv2
import base64
import io
from PIL import Image
from inference.core.data_models import ObjectDetectionInferenceRequest
from inference.models.yolov8.yolov8_object_detection import (
    YOLOv8ObjectDetectionOnnxRoboflowInferenceModel,
)

model = YOLOv8ObjectDetectionOnnxRoboflowInferenceModel(
    model_id="ball-qgqhv/6",
    device_id="my-pc",
    # Replace ROBOFLOW_API_KEY with your Roboflow API Key
    api_key="ROBOFLOW_API_KEY",
)
```

Step #2: Run Inference on an Image

```python
# Read your input image from your local files
frame = cv2.imread("image1.jpg")

# Initializing some flag variables that will come in handy later
cue_ball = 1
ball = 0

# Encoding the frame as JPEG, then converting it to base64
retval, buffer = cv2.imencode('.jpg', frame)
img_str = base64.b64encode(buffer)

request = ObjectDetectionInferenceRequest(
    image={
        "type": "base64",
        "value": img_str,
    },
    confidence=0.3,
    iou_threshold=0.3,
    visualization_labels=True,
    visualize_predictions=True,
)

results = model.infer(request)

# Decode the encoded image bytes in results.visualization into an OpenCV image.
# PIL returns pixels in RGB order while OpenCV expects BGR, so the channels are swapped.
def bytes_to_bgr_image(image_bytes):
    img = Image.open(io.BytesIO(image_bytes))
    opencv_img = cv2.cvtColor(np.array(img), cv2.COLOR_RGB2BGR)
    return opencv_img

output_img = bytes_to_bgr_image(results.visualization)

# Saving the image with the predictions visualized
cv2.imwrite("output.jpg", output_img)
```

The output image from our program with the predictions visualized is shown below.

Step #3: Check Predictions for a Flag and Cue Ball

```python
# cue_ball and ball are the flags initialized in Step #2:
# cue_ball is cleared once the cue ball is found outside the flag,
# and ball is set if any other ball is found outside the flag.
for predictions in results.predictions:
    # Checking for the presence of the flag detection
    if predictions.class_name == 'flag':
        print("Flag detected")
        x = predictions.x
        y = predictions.y
        width = predictions.width
        height = predictions.height

        # Getting the min and max coordinates of the flag's bounding box
        xmin = int(x - width / 2)
        xmax = int(x + width / 2)
        ymin = int(y - height / 2)
        ymax = int(y + height / 2)

        # Checking every ball prediction against the flag's bounding box
        for ball_pred in results.predictions:
            print(ball_pred)
            if "ball" in ball_pred.class_name:
                x_ball = ball_pred.x
                y_ball = ball_pred.y
                outside_flag = (x_ball < xmin) or (x_ball > xmax) or (y_ball < ymin) or (y_ball > ymax)

                if ball_pred.class_name == "ball0":
                    print("Cue ball detected")
                    # Checking that the cue ball is outside of the flag
                    if outside_flag:
                        print("Cue ball outside of flag detection")
                        cue_ball = 0
                else:
                    # Checking that the other balls are not outside of the flag
                    if outside_flag:
                        print("Ball outside of flag detection, Game setup not ready")
                        ball = 1

# Checking that all conditions are satisfied
if ball == 0 and cue_ball == 0:
    print("Start Position of Game Detected")
```

Here is a screenshot of the output:
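The start-of-game check from Step #3 can also be packaged as a pure function, which makes it easy to test without calling the model. In this sketch, `Detection` is a hypothetical stand-in exposing the same `class_name`, `x`, `y`, `width`, and `height` fields that the Step #3 code reads from each prediction. One small difference from the loop above: it also requires at least one object ball inside the flag, so an empty table does not count as a start position.

```python
from dataclasses import dataclass


@dataclass
class Detection:
    """Hypothetical stand-in for one prediction (center-format box)."""
    class_name: str
    x: float
    y: float
    width: float
    height: float


def is_start_position(detections, cue_class="ball0", rack_class="flag"):
    """Return True if all object balls sit inside the flag box and the cue ball sits outside it."""
    racks = [d for d in detections if d.class_name == rack_class]
    if not racks:
        return False  # no flag detected, so the rack cannot be checked
    rack = racks[0]
    xmin, xmax = rack.x - rack.width / 2, rack.x + rack.width / 2
    ymin, ymax = rack.y - rack.height / 2, rack.y + rack.height / 2

    def inside(d):
        # Same test as Step #3: compare the ball's center with the flag box
        return xmin <= d.x <= xmax and ymin <= d.y <= ymax

    balls = [d for d in detections if "ball" in d.class_name]
    cues = [d for d in balls if d.class_name == cue_class]
    others = [d for d in balls if d.class_name != cue_class]
    cue_ok = bool(cues) and not any(inside(c) for c in cues)
    rack_ok = bool(others) and all(inside(d) for d in others)
    return cue_ok and rack_ok
```

Assuming the model’s prediction objects expose those same attributes, `is_start_position(results.predictions)` should work on them directly.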

Detecting Ball Collisions

Next, we will detect ball collisions: when two pool balls hit each other.

We can detect when two balls have collided by checking for the intersection of bounding boxes using the following code (the code from the first two steps above can be reused for this):

```python
# A brute-force method: compare every ball's bounding box with every other ball's
def check_box_overlap(box1, box2):
    x1_1, y1_1, x2_1, y2_1 = box1
    x1_2, y1_2, x2_2, y2_2 = box2
    # The boxes are disjoint if one lies entirely to the left or right of the other...
    if x2_1 < x1_2 or x2_2 < x1_1:
        return False
    # ...or entirely above or below it
    if y2_1 < y1_2 or y2_2 < y1_1:
        return False
    return True

collided = []

for predictions in results.predictions:
    if "ball" in predictions.class_name:
        x = predictions.x
        y = predictions.y
        width = predictions.width
        height = predictions.height
        ball1 = predictions.class_name
        x1_1 = int(x - width / 2)
        x2_1 = int(x + width / 2)
        y1_1 = int(y - height / 2)
        y2_1 = int(y + height / 2)

        for other in results.predictions:
            if "ball" in other.class_name:
                x_1 = other.x
                y_1 = other.y
                width_2 = other.width
                height_2 = other.height
                ball2 = other.class_name
                x1_2 = int(x_1 - width_2 / 2)
                x2_2 = int(x_1 + width_2 / 2)
                y1_2 = int(y_1 - height_2 / 2)
                y2_2 = int(y_1 + height_2 / 2)

                if check_box_overlap((x1_1, y1_1, x2_1, y2_1), (x1_2, y1_2, x2_2, y2_2)):
                    # Ensure these bounding boxes have not already been considered
                    if (ball1 != ball2) and (ball1 not in collided) and (ball2 not in collided):
                        print(ball1, " and ", ball2, " have collided.")
                        collided.append(ball1)
                        collided.append(ball2)
                        break
```

The terminal output below notes which balls have collided:

The image output shows the state of the game:
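Bounding-box overlap is a convenient proxy, but balls are round: two diagonal neighbors can have overlapping box corners without touching. As an alternative technique (not the method used above), this sketch approximates each ball as a circle whose radius is half the average of its box width and height, and reports a contact when the center distance is at most the sum of the radii plus a small tolerance. It also uses `itertools.combinations` to visit each pair exactly once. The dictionary input format and the 2-pixel `tolerance` are assumptions to tune for your footage.

```python
import math
from itertools import combinations


def find_contacts(balls, tolerance=2.0):
    """Return pairs of ball names whose circles touch or overlap.

    `balls` maps a ball name to its (x, y, width, height) box in center
    format, the same layout as the model's predictions. `tolerance` is an
    assumed slack in pixels to absorb detector jitter.
    """
    contacts = []
    for (name_a, a), (name_b, b) in combinations(sorted(balls.items()), 2):
        # Radius estimated as half the mean of box width and height
        r_a = (a[2] + a[3]) / 4
        r_b = (b[2] + b[3]) / 4
        distance = math.hypot(a[0] - b[0], a[1] - b[1])
        if distance <= r_a + r_b + tolerance:
            contacts.append((name_a, name_b))
    return contacts
```

Unlike the `collided` list above, which lets each ball appear in at most one pair, this version reports every touching pair, so a ball in contact with two others shows up twice.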

Detecting a Ball Being Pocketed

We can detect when a ball has been pocketed by checking for the intersection of bounding boxes, as shown in the code above. The only difference would be checking for the intersection of objects detected as bags (pockets) and balls.
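Here is a self-contained sketch of that single-frame check. It reuses the same axis-aligned overlap test as `check_box_overlap`, but pairs balls with pockets instead of with each other. Detections are assumed to be `(class_name, x, y, width, height)` tuples, and the `pocket_key="bag"` substring is an assumption based on the “bags” label mentioned above; check the class list of the model you deploy.

```python
def to_corners(x, y, width, height):
    """Convert a center-format box to (xmin, ymin, xmax, ymax)."""
    return (x - width / 2, y - height / 2, x + width / 2, y + height / 2)


def boxes_overlap(box1, box2):
    """Axis-aligned overlap test on (xmin, ymin, xmax, ymax) boxes."""
    return not (box1[2] < box2[0] or box2[2] < box1[0]
                or box1[3] < box2[1] or box2[3] < box1[1])


def balls_in_pockets(detections, pocket_key="bag"):
    """Return (ball, pocket) name pairs whose boxes overlap in one frame.

    `detections` is a list of (class_name, x, y, width, height) tuples;
    `pocket_key` is an assumed substring of the pocket class names.
    """
    pockets = [d for d in detections if pocket_key in d[0]]
    balls = [d for d in detections if "ball" in d[0]]
    hits = []
    for ball in balls:
        for pocket in pockets:
            if boxes_overlap(to_corners(*ball[1:]), to_corners(*pocket[1:])):
                hits.append((ball[0], pocket[0]))
    return hits
```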

One could argue that a ball can hit the pocket and bounce back out, so how can we say it was pocketed based solely on the intersection of bounding boxes? Ideally, when analyzing a game of pool, we would have all the frames of the game, so we could check the previous and next frames to confirm the ball was indeed pocketed. This is where tracking the objects on the pool table becomes very useful.
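One simple way to use neighboring frames is sketched below: a ball counts as pocketed only if it overlapped a pocket in some frame and then stayed undetected for several consecutive frames afterward, so a ball that rattles out and reappears is not counted. The input format (per-frame sets of visible ball names plus the subset near a pocket) and the `min_missing` threshold are assumptions; a proper object tracker would also help with occlusions, where a ball briefly disappears behind a player or cue.

```python
def confirm_pocketed(frames, min_missing=5):
    """Confirm pocketed balls across a time-ordered sequence of frames.

    `frames` is a list of (visible, near_pocket) pairs: `visible` is the set
    of ball names detected in that frame, and `near_pocket` is the subset
    whose box overlaps a pocket. `min_missing` is an assumed threshold.
    """
    pocketed = set()
    for t, (_, near_pocket) in enumerate(frames):
        for ball in near_pocket:
            if ball in pocketed:
                continue
            following = frames[t + 1 : t + 1 + min_missing]
            # Require a full window of later frames in which the ball is absent
            if len(following) == min_missing and all(ball not in visible for visible, _ in following):
                pocketed.add(ball)
    return pocketed
```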

Conclusion

In this guide, we went over applying object detection to a game of pool. We saw how it opens up a whole world of analytics related to the game.

Players can benefit from predictive analytics, giving them an edge in learning strategy and thus helping them to improve their performance. Referees can use pool analytics systems as a reliable tool for fair play. Spectators enjoy an enhanced viewing experience with dynamic overlays and predictive shot paths.