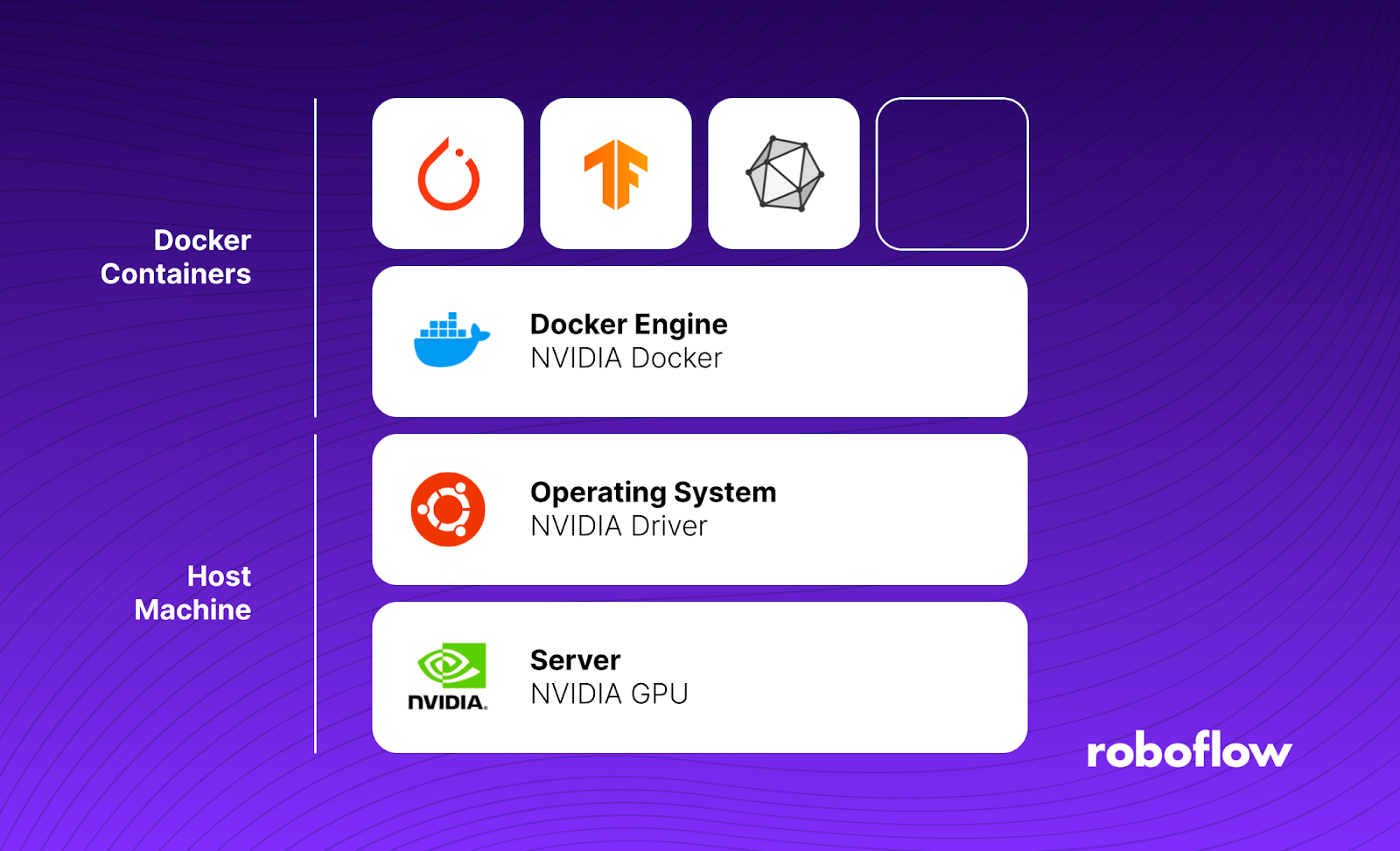

The Roboflow Inference Server is a drop-in replacement for the Hosted Inference API that can be deployed on your own hardware. We have optimized the Inference Server to get maximum performance from the NVIDIA Jetson line of edge AI devices by tailoring its drivers, libraries, and binaries specifically to the Jetson CPU and GPU architectures.

This blog post provides a comprehensive guide to deploying OpenAI's CLIP model at the edge using a Jetson Orin running JetPack 5.1.1. The process uses Roboflow's open source inference server, available in the roboflow/inference GitHub repository.

What is CLIP? What Can I Do with CLIP?

CLIP is an open source vision model developed by OpenAI. CLIP allows you to generate text and image embeddings. These embeddings encode semantic information about text and images which you can use for a wide variety of computer vision tasks.

Here are a few tasks you can complete with CLIP:

- Identify the similarity between two images or frames in a video;

- Identify the similarity between a text prompt and an image (for example, whether "train track" describes the contents of an image);

- Auto-label images for an image classification model;

- Build a semantic image search engine;

- Collect images that are similar to a specified text prompt for use in training a model;

- And more!

By deploying CLIP on a Jetson, you can run these tasks at the edge, on the device itself.
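Several of the tasks above (semantic image search, auto-labeling, and collecting images that match a prompt) come down to the same operation: compare one embedding against many and keep the closest matches. The sketch below assumes you already have embeddings as lists of floats, for example from the server's `/clip/embed_image` endpoint used later in this post. The helper name `rank_by_similarity` and the toy three-dimensional vectors are hypothetical. Real CLIP embeddings have hundreds of dimensions.

```python
import numpy as np


def rank_by_similarity(query, candidates):
    """Return (indices, scores) of `candidates`, most similar to `query` first.

    Hypothetical helper: `query` is one embedding and `candidates` is a list
    of embeddings with the same length, compared by cosine similarity.
    """
    query = np.asarray(query, dtype=float).ravel()
    candidates = np.asarray(candidates, dtype=float)
    # Normalize to unit length so a dot product equals cosine similarity
    query = query / np.linalg.norm(query)
    candidates = candidates / np.linalg.norm(candidates, axis=1, keepdims=True)
    scores = candidates @ query
    # argsort is ascending, so reverse it to put the best match first
    order = np.argsort(scores)[::-1]
    return order.tolist(), scores[order].tolist()


# Toy 3-dimensional "embeddings", for illustration only
query = [1.0, 0.0, 0.0]
library = [
    [0.0, 1.0, 0.0],  # unrelated to the query
    [0.9, 0.1, 0.0],  # close to the query
    [0.5, 0.5, 0.0],  # somewhat related
]
order, scores = rank_by_similarity(query, library)
print(order)  # → [1, 2, 0]
```

For auto-labeling, the query would be an image embedding and the candidates would be text embeddings of your class names, so the first index is the predicted label. For search, the roles swap: a text prompt's embedding is the query and your image embeddings are the candidates.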

Flash Jetson Device

Ensure your Jetson is flashed with JetPack 5.1.1. To do so, download the Jetson Orin Nano Developer Kit SD card image from the JetPack SDK page, then write the image to your microSD card following NVIDIA's instructions.

Once you power on and boot the Jetson Orin Nano, you can verify that JetPack 5.1.1 is installed using the jetsonUtilities repository:

```bash
git clone https://github.com/jetsonhacks/jetsonUtilities.git
cd jetsonUtilities
# JetPack 5 is based on Ubuntu 20.04, where the interpreter is python3
python3 jetsonInfo.py
```

Run the Roboflow Inference Docker Container

Roboflow Inference comes with Docker configurations for a range of devices and environments, including a Docker container built specifically for NVIDIA Jetsons. You can learn more about building, pulling, and running Roboflow's Inference Docker images in our documentation.

Follow the steps below to build and run the Jetson Orin Docker container for JetPack 5.1.1.

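```bash
git clone https://github.com/roboflow/inference
cd inference

# Build the Jetson image; the trailing "." is the build context (repo root)
docker build \
    -f dockerfiles/Dockerfile.onnx.jetson.5.1.1 \
    -t roboflow/roboflow-inference-server-trt-jetson-5.1.1 \
    .

# Run the server with GPU access, caching data in the "roboflow" volume
sudo docker run --privileged --net=host --runtime=nvidia \
    --mount source=roboflow,target=/tmp/cache \
    -e NUM_WORKERS=1 \
    roboflow/roboflow-inference-server-trt-jetson-5.1.1:latest
```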
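The container may take some time to start, so a script that talks to it may need to wait until the server accepts connections. The sketch below uses only the Python standard library. It assumes the default address `http://localhost:9001` (the run command uses `--net=host`). It treats *any* HTTP response, including an error status such as 404, as proof that the server is up, so it does not depend on a particular health endpoint. The name `wait_for_server` is hypothetical.

```python
import time
import urllib.error
import urllib.request


def wait_for_server(url="http://localhost:9001", timeout=60.0, interval=1.0):
    """Poll `url` until the server answers or `timeout` seconds pass.

    Returns True as soon as any HTTP response arrives, False on timeout.
    """
    deadline = time.monotonic() + timeout
    while time.monotonic() < deadline:
        try:
            with urllib.request.urlopen(url, timeout=interval):
                return True
        except urllib.error.HTTPError:
            # The server replied with an error status, so it is running
            return True
        except OSError:
            # URLError, connection refused, or timeout: not ready yet
            time.sleep(interval)
    return False
```

Call `wait_for_server()` once before sending your first request. It returns `False` if nothing answered within the timeout.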

How to Use CLIP on the Roboflow Inference Server

The code below sends a POST request to the CLIP endpoint of your edge inference server and returns the image's embedding vector. Pass in the image URL, your Roboflow API key, and the base URL of the Docker container running on your JetPack 5.1.1 device (by default http://localhost:9001):

```python
import requests

# Image to embed; images can be provided as URLs or as base64-encoded strings
image_url = "https://source.roboflow.com/pwYAXv9BTpqLyFfgQoPZ/u48G0UpWfk8giSw7wrU8/original.jpg"

# Replace ROBOFLOW_API_KEY with your Roboflow API key
api_key = "ROBOFLOW_API_KEY"

# Inference server URL (localhost:9001, infer.roboflow.com, etc.)
base_url = "http://localhost:9001"

# Define the request payload
infer_clip_payload = {
    "image": {
        "type": "url",
        "value": image_url,
    },
}

res = requests.post(
    f"{base_url}/clip/embed_image?api_key={api_key}",
    json=infer_clip_payload,
)
res.raise_for_status()  # Stop early on HTTP errors (bad key, server down, etc.)

embeddings = res.json()["embeddings"]
print(embeddings)
```

Compute Image Similarity with CLIP Embeddings
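To compare several images, you first need an embedding for each one. The request code notes that images can also be sent as base64-encoded strings, which is convenient for local files such as frames captured on the Jetson. The sketch below builds that payload. It assumes the server accepts `"type": "base64"` as the counterpart to the `"type": "url"` form used above. The helper name `make_base64_payload` and the file name `frame.jpg` are hypothetical.

```python
import base64


def make_base64_payload(image_bytes):
    """Build a /clip/embed_image payload from raw image bytes (e.g. a JPEG)."""
    encoded = base64.b64encode(image_bytes).decode("ascii")
    return {
        "image": {
            "type": "base64",  # assumed counterpart to the "url" type
            "value": encoded,
        },
    }


# Usage sketch (needs a real image file and the running server):
# with open("frame.jpg", "rb") as f:
#     payload = make_base64_payload(f.read())
# res = requests.post(f"{base_url}/clip/embed_image?api_key={api_key}", json=payload)
```

Sending one request per image gives you the set of embeddings to compare.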

After creating embedding vectors for multiple images, you can calculate the cosine similarity between any two embeddings and convert it into a similarity score:
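Written out, the `calculate_similarity` function below computes the cosine similarity of two embeddings $\mathbf{a}$ and $\mathbf{b}$ and then rescales it from the range $[-1, 1]$ to a score between 0 and 100:

$$
\cos\theta = \frac{\mathbf{a}\cdot\mathbf{b}}{\lVert\mathbf{a}\rVert\,\lVert\mathbf{b}\rVert},
\qquad
\text{score} = \frac{\cos\theta + 1}{2}\times 100
$$

For example, a cosine similarity of 0.8 gives $(0.8 + 1)/2 \times 100 = 90$. Orthogonal embeddings ($\cos\theta = 0$) score 50, not 0. This makes the percentage a convenient rescaling rather than a probability.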

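```python
import numpy as np


# Function to calculate the similarity between two embeddings
def calculate_similarity(embedding1, embedding2):
    # Flatten so both a plain vector and a nested [[...]] list work
    embedding1 = np.asarray(embedding1, dtype=float).ravel()
    embedding2 = np.asarray(embedding2, dtype=float).ravel()

    # Cosine similarity; other metrics such as Euclidean distance also work
    cosine_similarity = np.dot(embedding1, embedding2) / (
        np.linalg.norm(embedding1) * np.linalg.norm(embedding2)
    )

    # Rescale from [-1, 1] to a 0-100 percentage
    similarity_percentage = (cosine_similarity + 1) / 2 * 100
    return similarity_percentage


# embedding1 and embedding2 are embeddings returned by /clip/embed_image
embedding_similarity = calculate_similarity(embedding1, embedding2)
```

With CLIP on the edge, you can embed images and text and compute similarity scores!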